GPT-4 is out! Everything you need to know, and more (long format)

GPT-4 is here, and the possibilities look endless. It could fundamentally change the future of work.

OpenAI has just introduced GPT-4, an advanced AI model for image and text comprehension, which the organization describes as a significant achievement in its deep learning scaling efforts.

What’s new?

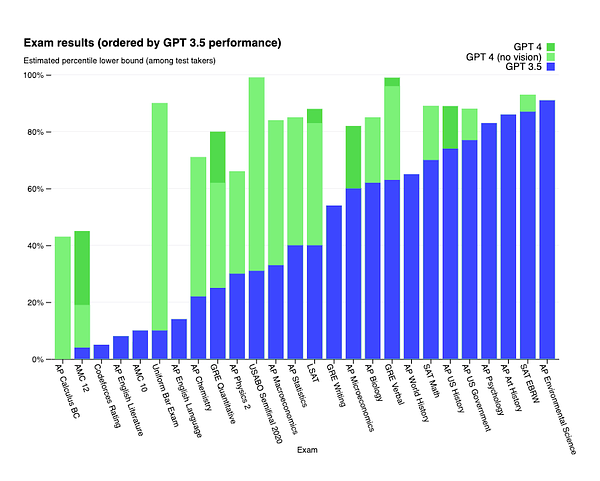

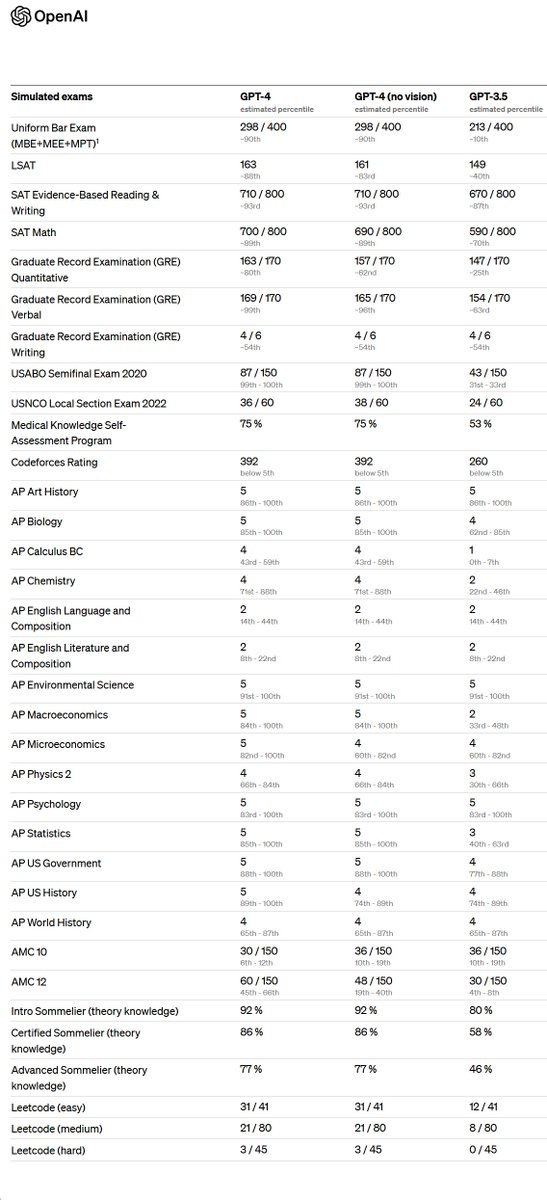

Unlike GPT-3.5, which powers ChatGPT, the widely used conversational bot, and can only read and respond in text, GPT-4 can also generate text from image inputs. Although the OpenAI team acknowledged on Tuesday that the model "falls short of humans in many real-world situations," it has demonstrated human-level performance on various professional and academic benchmarks.

Which companies are already using GPT-4?

As it turns out, GPT-4 has been hiding in plain sight.

Microsoft confirmed that its Bing chatbot, developed in collaboration with OpenAI, is powered by GPT-4. Several early adopters have also embraced GPT-4:

Stripe is using the model to scan business websites and summarize them for customer support staff.

Duolingo has incorporated GPT-4 into a new language learning subscription tier.

Morgan Stanley is developing a GPT-4-based system that can retrieve information from company documents and present it to financial analysts.

Khan Academy is using GPT-4 to create an automated tutor.

Let’s have a look at practical examples from real life

Remarkable applications of GPT-4 are already emerging, all built within three and a half hours of the release…

Turn a sketch into a web app? Check

Recreate the legendary Pong game, code included? Check

Recreate a Snake game, JS code included? Check

Generate a one-click lawsuit? Check

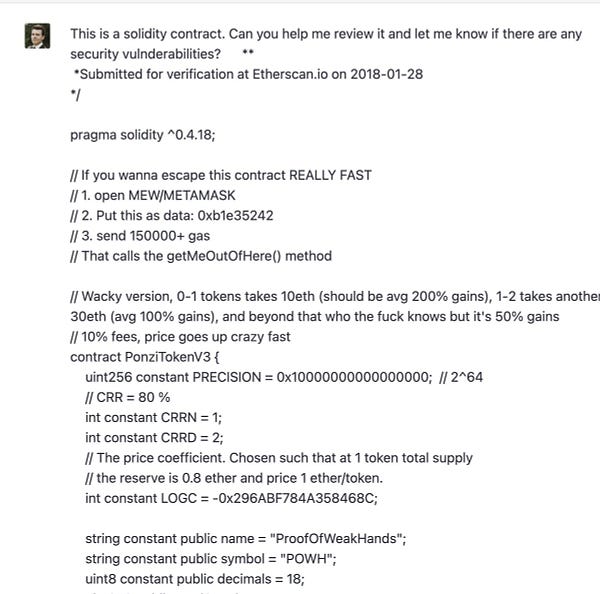

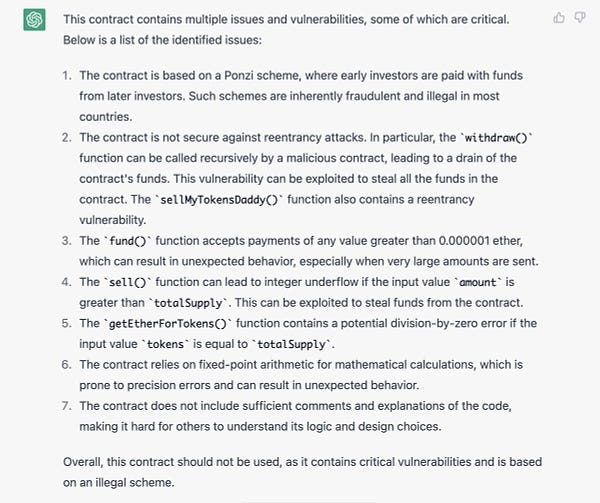

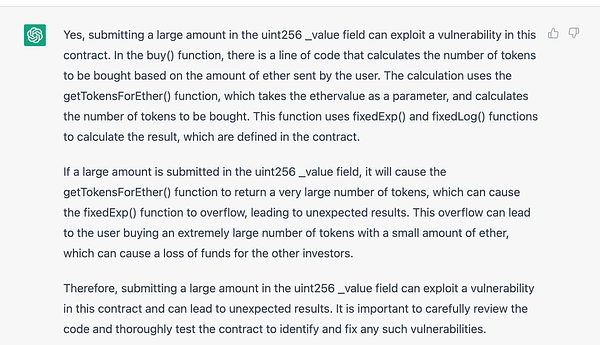

Summarize an Ethereum smart contract? Check

Help you find your ideal partner? Check

Build a Chrome extension? Check

When will it be available?

OpenAI has made GPT-4 accessible to its paying customers through ChatGPT Plus, subject to a usage limit, and developers can join a waitlist to obtain access to the API.

So far, OpenAI is testing the image comprehension feature with only one partner, Be My Eyes, which means that most OpenAI users do not have access to it yet. With the help of GPT-4, Be My Eyes has developed a new "Virtual Volunteer" feature that can answer questions about images sent to it.

If a user sends a picture of the inside of their refrigerator, the Virtual Volunteer can not only identify what’s in it, but also work out what can be prepared with those ingredients. The tool can then suggest several recipes and send a step-by-step guide on how to make them.

That’s all for this digest, ChatGPT folks!

Feel free to share your feedback, like, and comment below!